Ars Technica — the awesome technical website — put together an equally awesome video interview with me about the making of Crash Bandicoot as part of their War Stories series detailing how various video games were created.

You can check it out here:

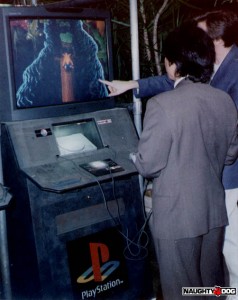

This interview gets into the nitty-gritty of various problems we faced and overcame at Naughty Dog in our quest to make one of the first 3D Platform Action Adventure games. When we started, no one had ever made a Character Action game in 3D, and we were forging boldly (insanely?) into new territory. We were young. 3D graphics were young. The PlayStation was young. Gaming was young (or at least younger than it is now). So Jason, I, and the rest of the team had to figure everything out from scratch and just try to make the best game possible.

Speaking of teams, the one at Ars did an awesome job animating and editing my detailed story so that it actually makes sense!

And on 3/26/20 Ars cut a second episode from my footage for their “Extended Interview” series:

Check out the full Ars article here, or the second one here.

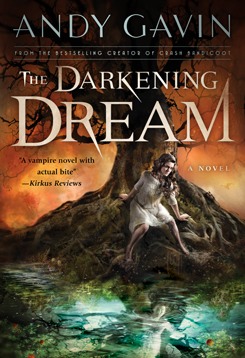

My novels: The Darkening Dream and Untimed

The series begins here: Making Crash Bandicoot


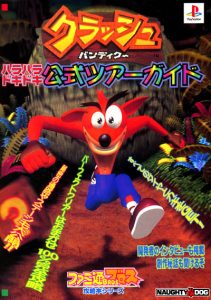

For more articles detailing the making of Crash Bandicoot, click here.

![Crash Bandicoot Spanish PAL DVD slim cover, front](http://all-things-andy-gavin.com/wp-content/uploads/2012/01/Crash_Bandicoot_Spanish_Dvd_Slim_pal-cdcovers_cc-front-300x211.jpg)